Keeping Cool Under Pressure

Josh Schaap

January 26, 2016

How to Keep a Data Center Cool

Sometimes, industry predictions come true with stunning accuracy.

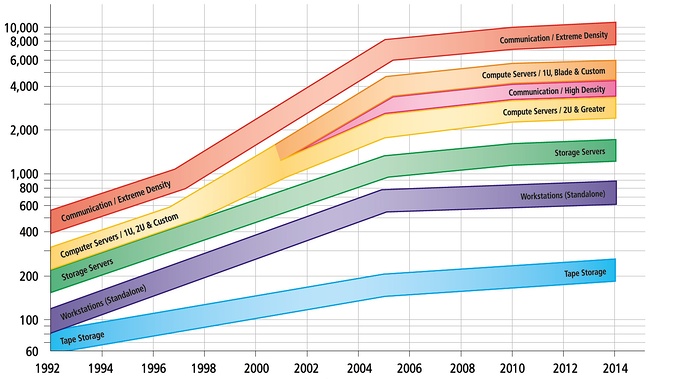

Take, for example, this graph, produced way back in 2005 by the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE).

What you’re looking at is a long-term forecast of heat load by footprint, made specifically for the IT data center and telecom markets. Today, the upper limit of air-cooled compute infrastructures sits right at 4.5 kW/ft^2, which aligns nicely with ASHRAE’s prediction. Whether you call it a self-fulfilling prophecy or an advancement in cooling and liquid immersion systems, the end result is a slow but steady rise in compute densities over time.

As we’ve mentioned before, thermal management is just one of several factors data center operators have to contend with, but it’s an important one because an overheated data center is an expensive one. A recent study from the Ponemon Institute shows that the average cost of unplanned downtime is $7,900 per minute. With outages averaging 86 minutes each, you’re looking at some seriously expensive downtime: close to $700,000 per incident.
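The arithmetic behind that per-incident figure is a simple product. Here is a minimal sketch using only the two Ponemon numbers quoted above; the function name is ours, chosen for illustration.

```python
def downtime_cost(cost_per_minute: float, minutes: float) -> float:
    """Estimated cost of one outage: per-minute cost times duration."""
    return cost_per_minute * minutes


# Figures quoted in the text: $7,900 per minute, 86 minutes per outage.
average_incident = downtime_cost(7_900, 86)
print(f"${average_incident:,.0f}")  # $679,400
```

Plugging in your own per-minute cost and typical outage length gives a quick first estimate of what a thermal event could cost your facility.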

One way to combat overheating is to use computational fluid dynamics (CFD) software tools, which model how the cooling infrastructure is performing against the data center’s distributed heat loads. These tools are designed to ensure optimal performance from your hot aisle and cold aisle containment systems. They also help point out where you might be able to redeploy assets to take full advantage of the available cooling capacity.

Still, some data center managers operate at the high end of the allowable inlet air temperature range to lower PUE and cooling costs, while requiring that their temperature monitoring and control systems adjust quickly to changing conditions. As you can imagine, this comes at a cost, namely the risk of thermal runaway. Server Technology has an answer to this dilemma in its High Density Outlet Technology (HDOT) PDUs, which monitor both power consumption and temperature within cabinets. Their built-in alerts and reporting functions transmit data to HVAC systems, DCIM tools, and IT personnel, providing a true “last line of defense.” The HDOT can also operate at full power load in a 65 °C (149 °F) environment, allowing the data center to run at a warmer ambient temperature.
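To make the idea of cabinet-level temperature alerts concrete, here is a minimal sketch of threshold checking on inlet readings. It is not Server Technology's API or firmware logic: the `Reading` type, the `check_inlets` function, the cabinet names, and the 27 °C and 32 °C thresholds are all assumptions for illustration, and an operator would set thresholds to match their own equipment and policy.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    cabinet: str    # hypothetical cabinet identifier
    inlet_c: float  # inlet air temperature in degrees Celsius


def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


def check_inlets(readings, warn_c=27.0, critical_c=32.0):
    """Return (cabinet, level, temp_c) for each reading at or above a threshold.

    The default thresholds are illustrative assumptions, not vendor values.
    """
    alerts = []
    for r in readings:
        if r.inlet_c >= critical_c:
            alerts.append((r.cabinet, "critical", r.inlet_c))
        elif r.inlet_c >= warn_c:
            alerts.append((r.cabinet, "warning", r.inlet_c))
    return alerts


readings = [Reading("A01", 24.5), Reading("A02", 28.0), Reading("B07", 33.5)]
for cabinet, level, temp_c in check_inlets(readings):
    print(f"{cabinet}: {level} at {temp_c:.1f} C ({c_to_f(temp_c):.1f} F)")
```

In practice, alerts like these would be forwarded to whatever HVAC, DCIM, or paging system the facility already uses rather than printed.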

As the Power Strategy Experts, Server Technology is here to help you stay powered, be supported, and get ahead. We encourage you to learn more about our HDOT PDUs, which put remote monitoring of power and temperature back in your hands.
